"If voice is the future of computing, what about those who cannot hear or speak?"

This is the question software developer Abhishek Singh asked himself before deciding to answer it with AI, deep learning, and a webcam: he created an app that allows Alexa to understand sign language.

Tech companies have been slowly pushing apps, like Voiceitt and Tecla, that allow users with speech impediments to interact with voice assistants. But there are barely a handful of solutions for those who are deaf or have hearing loss.

Back in 2013, Microsoft trialed its motion-sensing Kinect to recognize gestures as PC inputs, although the project was shelved once the Kinect was discontinued. Fast forward to 2017, when NVIDIA and KinTrans explored using AI to translate sign language into on-screen captions.

This year, Amazon released the Echo Show, which allows users to interact with Alexa using the touch-screen. So it appears Amazon is at least beginning to consider more accessible forms of input.

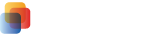

For his "thought experiment", Singh enlisted TensorFlow.js, Google's JavaScript machine learning (ML) library. He then hooked an Amazon Echo to his laptop and trained the ML model by repeatedly gesturing American Sign Language (ASL) signs in front of the webcam.
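To make "training by repeated gesturing" concrete, here is a minimal sketch in Python. The article does not say which model Singh used, so everything here is an assumption for illustration: a toy k-nearest-neighbours classifier, a hypothetical `SignClassifier` class, and made-up two-number feature vectors standing in for whatever a real system would extract from webcam frames.

```python
import math
from collections import Counter


class SignClassifier:
    """Toy k-nearest-neighbours classifier (hypothetical, not Singh's code)."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []  # list of (feature_vector, label) pairs

    def add_example(self, features, label):
        # Each repetition of a gesture in front of the camera adds one example.
        self.examples.append((list(features), label))

    def predict(self, features):
        if not self.examples:
            raise ValueError("no training examples recorded yet")
        # Take the k stored examples closest to the new feature vector.
        nearest = sorted(
            self.examples, key=lambda ex: math.dist(ex[0], features)
        )[: self.k]
        # Majority vote among their labels.
        votes = Counter(label for _, label in nearest)
        return votes.most_common(1)[0][0]


# Made-up feature vectors; a real system would derive them from images.
clf = SignClassifier(k=3)
for vec in [(0.9, 0.1), (1.0, 0.2), (0.8, 0.0)]:
    clf.add_example(vec, "alexa")
for vec in [(0.1, 0.9), (0.2, 1.0), (0.0, 0.8)]:
    clf.add_example(vec, "weather")

print(clf.predict((0.85, 0.15)))
```

In a design like this, adding a new sign only means recording more labelled examples, with no retraining step.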

Once the AI recognized his gestures, Singh plugged in Google's text-to-speech capabilities so he could sign things like, "Alexa, what's the weather today?" and watch his question be transcribed and read out loud to Alexa.

Alexa's response would then be fed back into the system and transcribed into text for the user to read.
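The round trip described above (recognized signs become text, the text is spoken to Alexa, and Alexa's spoken reply is transcribed back to text) can be sketched roughly as follows. This is only an outline under stated assumptions: `signs_to_query` and `run_turn` are hypothetical names, the text-to-speech and speech-to-text steps are passed in as stand-in functions rather than real services, and collapsing repeated predictions is an assumed detail, not something the article describes.

```python
from typing import Callable, Iterable, List, Tuple


def signs_to_query(labels: Iterable[str]) -> str:
    """Join recognized sign labels into a query string.

    Assumption: a recognizer may emit the same label several times in a
    row, so consecutive duplicates are collapsed into one word.
    """
    words: List[str] = []
    for label in labels:
        if not words or words[-1] != label:
            words.append(label)
    return " ".join(words)


def run_turn(
    labels: Iterable[str],
    speak: Callable[[str], None],
    listen: Callable[[], str],
) -> Tuple[str, str]:
    """One exchange: signs -> text -> speech, then spoken reply -> text."""
    query = signs_to_query(labels)
    speak(query)       # stand-in for text-to-speech aimed at the assistant
    reply = listen()   # stand-in for transcribing the assistant's answer
    return query, reply


# Demo with stub functions in place of real speech services.
spoken: List[str] = []
query, reply = run_turn(
    ["Alexa", "Alexa", "what's", "the", "weather", "weather", "today"],
    speak=spoken.append,
    listen=lambda: "It is sunny.",
)
print(query)
print(reply)
```

Passing `speak` and `listen` in as functions keeps the sketch independent of any particular speech service.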

Credit: Abhishek Singh

It may seem like a "roundabout" solution, but it's still progress for the deaf community, which has yet to see similar efforts from major tech companies.

The app remains a proof-of-concept and only recognizes basic signs. Singh told The Verge that it’s relatively easy to add new vocabulary and that he will open-source the code.

“By releasing the code people will be able to download it and build on it further or just be inspired to explore this problem space,” he told The Verge. (You can watch the demo and find the project link on his website.)

Although Singh's solution is far from perfect, it's a clear demonstration that accessible voice tech is technically possible. It's just a matter of tech giants like Google and Amazon figuring out how to take Singh's concept and build it into their voice assistants.

“If these devices are to become a central way in which to interact with our homes or perform tasks, then some thought needs to be given to those who cannot hear or speak,” Singh said. “Seamless design needs to be inclusive in nature.”

Want to learn more about accessible voice tech? Read our post on how voice assistants are changing how people with disabilities get things done.


VOICE Copyright © 2018-2022 | All rights reserved: ModevNetwork LLC